Generative models are powerful at solving many types of problems. However, they are constrained by limitations like:

- They are frozen after training, leading to stale knowledge.

- They can't query or modify external data.

Function calling can help you overcome some of these limitations. Function calling is sometimes referred to as tool use because it allows a model to use external tools such as APIs and functions to generate its final response.

You can learn more about function calling in the Google Cloud documentation, including a helpful list of use cases for function calling.

Function calling mode is supported by all Gemini models (except for Gemini 1.0 models).

This guide shows you how you might implement a function call setup similar to the example described in the next major section of this page. At a high level, here are the steps to set up function calling in your app:

1. Write a function that can provide the model with information that it needs to generate its final response (for example, the function can call an external API).

2. Create a function declaration that describes the function and its parameters.

3. Provide the function declaration during model initialization so that the model knows how it can use the function, if needed.

4. Set up your app so that the model can send along the required information for your app to call the function.

5. Pass the function's response back to the model so that the model can generate its final response.

Overview of a function calling example

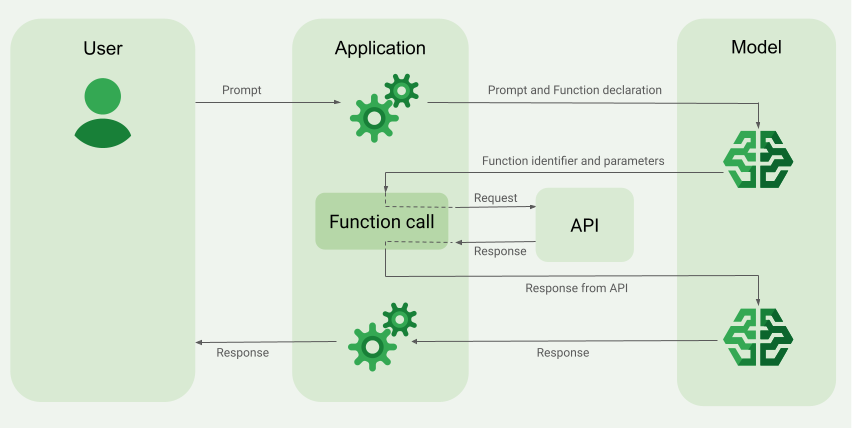

When you send a request to the model, you can also provide the model with a set of "tools" (like functions) that it can use to generate its final response. In order to utilize these functions and call them ("function calling"), the model and your app need to pass information back-and-forth to each other, so the recommended way to use function calling is through the multi-turn chat interface.

Imagine that you have an app where a user could enter a prompt like:

What was the weather in Boston on October 17, 2024?

The Gemini models may not know this weather information; however, imagine that you know of an external weather service API that can provide it. You can use function calling to give the Gemini model a pathway to that API and its weather information.

First, you write a function fetchWeather in your app that interacts with this hypothetical external API, which has this input and output:

| Parameter | Type | Required | Description |
|---|---|---|---|
| Input | | | |
| location | Object | Yes | The name of the city and its state for which to get the weather. Only cities in the USA are supported. Must always be a nested object of city and state. |
| date | String | Yes | Date for which to fetch the weather (must always be in YYYY-MM-DD format). |
| Output | | | |
| temperature | Integer | Yes | Temperature (in Fahrenheit) |
| chancePrecipitation | String | Yes | Chance of precipitation (expressed as a percentage) |
| cloudConditions | String | Yes | Cloud conditions (one of clear, partlyCloudy, mostlyCloudy, cloudy) |

When initializing the model, you tell the model that this fetchWeather function exists and how it can be used to process incoming requests, if needed. This is called a "function declaration". The model does not call the function directly. Instead, as the model is processing the incoming request, it decides if the fetchWeather function can help it respond to the request. If the model decides that the function can indeed be useful, the model generates structured data that will help your app call the function.

Look again at the incoming request: What was the weather in Boston on October 17, 2024? The model would likely decide that the fetchWeather function can help it generate a response. The model would look at what input parameters are needed for fetchWeather and then generate structured input data for the function that looks roughly like this:

    {
      functionName: "fetchWeather",
      location: {
        city: "Boston",
        state: "Massachusetts"  // the model can infer the state from the prompt
      },
      date: "2024-10-17"
    }

The model passes this structured input data to your app so that your app can call the fetchWeather function. When your app receives the weather conditions back from the API, it passes the information along to the model. This weather information allows the model to complete its final processing and generate its response to the initial request:

What was the weather in Boston on October 17, 2024?

The model might provide a final natural-language response like:

On October 17, 2024, in Boston, it was 38 degrees Fahrenheit with partly cloudy skies.
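The structured input data in this overview is generated by the model, so an app may want to check it before calling anything with it. The following is a minimal TypeScript sketch of such a check, assuming the argument shape from the input table above. The names `FetchWeatherArgs`, `isRecord`, and `parseFetchWeatherArgs` are hypothetical and not part of any SDK.

```typescript
// Hypothetical shape of the arguments the model is expected to generate
// for fetchWeather (mirrors the input table in this overview).
interface FetchWeatherArgs {
  location: { city: string; state: string };
  date: string; // YYYY-MM-DD
}

// Narrow an unknown value to a plain (non-array) object.
function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Validate untrusted, model-generated arguments before using them.
// Throws an Error describing the first problem found.
function parseFetchWeatherArgs(raw: unknown): FetchWeatherArgs {
  if (!isRecord(raw)) throw new Error("args must be an object");

  const loc = raw["location"];
  if (!isRecord(loc)) throw new Error("location must be a nested object");

  const city = loc["city"];
  const state = loc["state"];
  if (typeof city !== "string" || typeof state !== "string") {
    throw new Error("location.city and location.state must be strings");
  }

  const date = raw["date"];
  if (typeof date !== "string" || !/^\d{4}-\d{2}-\d{2}$/.test(date)) {
    throw new Error("date must be in YYYY-MM-DD format");
  }

  return { location: { city, state }, date };
}
```

The regular expression only checks the YYYY-MM-DD pattern, not whether the date exists on the calendar; a stricter check may be appropriate depending on the API.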

Implement function calling

Before you begin

If you haven't already, complete the getting started guide, which describes how to set up your Firebase project, connect your app to Firebase, add the SDK, initialize the Vertex AI service, and create a GenerativeModel instance.

The remaining steps in this guide show you how to implement a function call setup similar to the workflow described in Overview of a function calling example (see the top section of this page).

You can view the complete code sample for this function calling example later on this page.

Step 1: Write the function

Imagine that you have an app where a user could enter a prompt like:

What was the weather in Boston on October 17, 2024?

The Gemini models may not know this weather information; however, imagine that you know of an external weather service API that can provide it. The example in this guide relies on this hypothetical external API.

Write the function in your app that will interact with the hypothetical external API and provide the model with the information it needs to generate its final response. In this weather example, it will be a fetchWeather function that makes the call to this hypothetical external API.
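As a rough illustration of this step, here is a TypeScript sketch of what a fetchWeather function could look like. The type names `WeatherRequest` and `WeatherReport` are assumptions made for this sketch, and because the weather API is hypothetical, the function returns fixed sample values instead of making a network request.

```typescript
// Input and output shapes of the hypothetical weather API described in the
// overview. The type names are illustrative only.
interface WeatherRequest {
  location: { city: string; state: string };
  date: string; // YYYY-MM-DD
}

interface WeatherReport {
  temperature: number; // Fahrenheit
  chancePrecipitation: string; // expressed as a percentage
  cloudConditions: "clear" | "partlyCloudy" | "mostlyCloudy" | "cloudy";
}

// Stand-in for the call to the external weather service. A real app would
// make a network request here; this sketch returns fixed sample data so
// that it runs offline.
async function fetchWeather(request: WeatherRequest): Promise<WeatherReport> {
  if (!/^\d{4}-\d{2}-\d{2}$/.test(request.date)) {
    throw new Error(`Invalid date "${request.date}", expected YYYY-MM-DD`);
  }
  return {
    temperature: 38, // sample value
    chancePrecipitation: "56%", // sample value
    cloudConditions: "partlyCloudy", // sample value
  };
}
```

Keeping the return value a plain, serializable object makes it straightforward to hand the result back to the model in a later step.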

Step 2: Create a function declaration

Create the function declaration that you'll later provide to the model (next step of this guide).

In your declaration, include as much detail as possible in the descriptions for the function and its parameters.

The model uses the information in the function declaration to determine which function to select and how to provide parameter values for the actual call to the function. See Additional behaviors and options later on this page for how the model may choose among the functions, as well as how you can control that choice.

Note the following about the schema that you provide:

- You must provide function declarations in a schema format that's compatible with the OpenAPI schema. Vertex AI offers limited support of the OpenAPI schema.

  - The following attributes are supported: type, nullable, required, format, description, properties, items, enum.

  - The following attributes are not supported: default, optional, maximum, oneOf.

- By default, for Vertex AI in Firebase SDKs, all fields are considered required unless you specify them as optional in an optionalProperties array. For these optional fields, the model can populate the fields or skip them. Note that this is opposite from the default behavior for the Vertex AI Gemini API.

For best practices related to the function declarations, including tips for names and descriptions, see Best practices in the Google Cloud documentation.

Here's how you can write a function declaration:
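The sketch below shows one possible shape for the fetchWeather declaration in TypeScript. It uses small local types (`ParamSchema`, `FunctionDeclarationSketch`) as stand-ins, so it illustrates the structure rather than the SDK's own classes; check the SDK reference for the exact types and helpers to use. The `requiredFields` helper is also hypothetical and only demonstrates the "required unless listed in optionalProperties" rule noted above.

```typescript
// Minimal local types for an OpenAPI-style schema. These are illustrative
// stand-ins, not the SDK's own classes.
interface ParamSchema {
  type: "object" | "string" | "integer" | "number" | "boolean" | "array";
  description?: string;
  properties?: Record<string, ParamSchema>;
  optionalProperties?: string[]; // fields the model may skip
}

interface FunctionDeclarationSketch {
  name: string;
  description: string;
  parameters: ParamSchema;
}

// Declaration for fetchWeather, with detailed descriptions so the model can
// decide when to use the function and how to fill in its parameters.
const fetchWeatherDeclaration: FunctionDeclarationSketch = {
  name: "fetchWeather",
  description: "Get the weather conditions for a specific city on a specific date.",
  parameters: {
    type: "object",
    properties: {
      location: {
        type: "object",
        description:
          "The name of the city and its state for which to get the weather. " +
          "Only cities in the USA are supported.",
        properties: {
          city: { type: "string", description: "The city of the location." },
          state: { type: "string", description: "The US state of the location." },
        },
      },
      date: {
        type: "string",
        description: "Date for which to fetch the weather, always in YYYY-MM-DD format.",
      },
    },
  },
};

// Fields are treated as required unless they appear in optionalProperties.
function requiredFields(schema: ParamSchema): string[] {
  const optional = schema.optionalProperties ?? [];
  return Object.keys(schema.properties ?? {}).filter(
    (key) => optional.indexOf(key) === -1,
  );
}
```

Because the declaration lists no optionalProperties, both location and date count as required here.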

Step 3: Provide the function declaration during model initialization

The maximum number of function declarations that you can provide with the request is 128. See Additional behaviors and options later on this page for how the model may choose among the functions, as well as how you can control that choice (using a toolConfig to set the function calling mode).

Learn how to choose a model and optionally a location appropriate for your use case and app.
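To make the 128-declaration limit concrete, here is a small TypeScript sketch that bundles function declarations into the setup object an app might pass when creating the model. The `DeclaredFunction` and `ModelSetup` types, the `buildModelSetup` helper, and the placeholder model name are all assumptions for illustration; the real SDK defines its own initialization parameters.

```typescript
// Illustrative stand-ins; a real SDK defines its own types for these.
interface DeclaredFunction {
  name: string;
  description: string;
}

interface ModelSetup {
  model: string;
  tools: { functionDeclarations: DeclaredFunction[] }[];
}

const MAX_FUNCTION_DECLARATIONS = 128; // limit stated in this guide

// Bundle the declarations into a setup object, rejecting lists that exceed
// the documented maximum before any request is made.
function buildModelSetup(model: string, declarations: DeclaredFunction[]): ModelSetup {
  if (declarations.length > MAX_FUNCTION_DECLARATIONS) {
    throw new Error(
      `Got ${declarations.length} function declarations; the maximum is ${MAX_FUNCTION_DECLARATIONS}`,
    );
  }
  return { model, tools: [{ functionDeclarations: declarations }] };
}

// Example: a single declaration for the weather function.
// "your-model-name" is a placeholder, not a real model identifier.
const modelSetup = buildModelSetup("your-model-name", [
  { name: "fetchWeather", description: "Get the weather for a US city on a given date." },
]);
```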

Step 4: Call the function to invoke the external API

If the model decides that the fetchWeather function can indeed help it generate a final response, your app needs to make the actual call to that function using the structured input data provided by the model.

Since information needs to be passed back-and-forth between the model and the app, the recommended way to use function calling is through the multi-turn chat interface.

The following code snippet shows how your app is told that the model wants to use the fetchWeather function. It also shows that the model has provided the necessary input parameter values for the function call (and its underlying external API).

In this example, the incoming request contained the prompt What was the weather in Boston on October 17, 2024? From this prompt, the model inferred the input parameters that are required by the fetchWeather function (that is, city, state, and date).
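A TypeScript sketch of this step follows. It assumes the model's request arrives as a function name plus an arguments object; the `FunctionCallRequest` type, the `functionHandlers` registry, and `dispatchFunctionCall` are hypothetical names, and the fetchWeather handler is a stub that returns sample data rather than calling a real API.

```typescript
// Hypothetical shape of a function call requested by the model: the name of
// the function plus the arguments the model generated for it.
interface FunctionCallRequest {
  name: string;
  args: Record<string, unknown>;
}

type FunctionHandler = (
  args: Record<string, unknown>,
) => Promise<Record<string, unknown>>;

// Registry of the functions the app is willing to run on the model's behalf.
const functionHandlers = new Map<string, FunctionHandler>();

// Stub for the hypothetical weather API; returns fixed sample data.
functionHandlers.set("fetchWeather", async () => ({
  temperature: 38,
  chancePrecipitation: "56%",
  cloudConditions: "partlyCloudy",
}));

// Look up the requested function and run it with the model's arguments.
// The model never runs code itself; the app decides what actually executes.
async function dispatchFunctionCall(
  call: FunctionCallRequest,
): Promise<Record<string, unknown>> {
  const handler = functionHandlers.get(call.name);
  if (handler === undefined) {
    throw new Error(`Model requested an unknown function: ${call.name}`);
  }
  return handler(call.args);
}
```

Routing calls through an explicit registry means a name the app never declared is rejected instead of being executed.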

Step 5: Provide the function's output to the model to generate the final response

After the fetchWeather function returns the weather information, your app needs to pass it back to the model.

Then, the model performs its final processing, and generates a final natural-language response like:

On October 17, 2024, in Boston, it was 38 degrees Fahrenheit with partly cloudy skies.
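The following TypeScript sketch shows one way to package a function's output and send it back in the same chat. The `{ functionResponse: { name, response } }` wrapper and the `ChatLike` interface are assumptions for this sketch, so confirm the exact message format against the SDK reference. `stubChat` stands in for the model and simply echoes the temperature it receives.

```typescript
// Assumed wrapper for a function's output when it is sent back to the model.
interface FunctionResponsePart {
  functionResponse: { name: string; response: Record<string, unknown> };
}

// Minimal chat abstraction for this sketch; a real SDK's chat session would
// take its place.
interface ChatLike {
  sendMessage(parts: FunctionResponsePart[]): Promise<string>;
}

// Wrap the output under the name of the function that produced it, so the
// model can match the response to the call it requested.
function toFunctionResponsePart(
  functionName: string,
  output: Record<string, unknown>,
): FunctionResponsePart {
  return { functionResponse: { name: functionName, response: output } };
}

// Send the function's output back in the same chat so that the model can
// generate its final natural-language response.
async function completeWithFunctionOutput(
  chat: ChatLike,
  functionName: string,
  output: Record<string, unknown>,
): Promise<string> {
  return chat.sendMessage([toFunctionResponsePart(functionName, output)]);
}

// Stub that stands in for the model: it only echoes the data it was given.
const stubChat: ChatLike = {
  async sendMessage(parts) {
    const temps = parts.map((p) => String(p.functionResponse.response["temperature"]));
    return `It was ${temps.join(", ")} degrees Fahrenheit.`;
  },
};
```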

Additional behaviors and options

Here are some additional behaviors for function calling that you need to accommodate in your code and options that you can control.

The model may ask to call a function again or another function.

If the response from one function call is insufficient for the model to generate its final response, then the model may ask for an additional function call, or ask for a call to an entirely different function. The latter can only happen if you provide more than one function to the model in your function declaration list.

Your app needs to accommodate that the model may ask for additional function calls.
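One way to accommodate repeated requests is a loop that keeps answering function calls until the model returns text. The TypeScript sketch below assumes a simplified chat interface (`ChatSession`, `ModelTurn`) that is not the SDK's actual API, and the `maxRounds` cap is an added safeguard against endless loops rather than something this guide prescribes.

```typescript
// Simplified, hypothetical view of one model turn: optional text plus any
// function calls the model is asking the app to make.
interface ModelTurn {
  text?: string;
  functionCalls: { name: string; args: Record<string, unknown> }[];
}

interface FunctionResult {
  name: string;
  response: Record<string, unknown>;
}

interface ChatSession {
  send(input: string | FunctionResult[]): Promise<ModelTurn>;
}

type RoundHandler = (
  args: Record<string, unknown>,
) => Promise<Record<string, unknown>>;

// Keep answering the model's function calls until it replies without any.
async function runUntilText(
  chat: ChatSession,
  prompt: string,
  handlers: Map<string, RoundHandler>,
  maxRounds: number = 5,
): Promise<string> {
  let turn = await chat.send(prompt);
  for (let round = 0; turn.functionCalls.length > 0; round++) {
    if (round >= maxRounds) {
      throw new Error(`Model still requested function calls after ${maxRounds} rounds`);
    }
    const results: FunctionResult[] = [];
    for (const call of turn.functionCalls) {
      const handler = handlers.get(call.name);
      if (handler === undefined) {
        throw new Error(`Unknown function: ${call.name}`);
      }
      results.push({ name: call.name, response: await handler(call.args) });
    }
    // Return this round's results; the model may answer or ask again.
    turn = await chat.send(results);
  }
  return turn.text ?? "";
}
```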

The model may ask to call multiple functions at the same time.

You can provide up to 128 functions in your function declaration list to the model. Given this, the model may decide that multiple functions are needed to help it generate its final response. And it might decide to call some of these functions at the same time – this is called parallel function calling.

Your app needs to accommodate that the model may ask for multiple functions running at the same time, and your app needs to provide all the responses from the functions back to the model.
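A minimal TypeScript sketch of handling parallel function calls is shown below. It assumes the model's requests arrive as a list of name-and-arguments pairs; `ParallelCall`, `ParallelResult`, and `runCallsInParallel` are hypothetical names. The calls run concurrently with `Promise.all`, which preserves input order, so every response can be matched to its request and returned to the model together.

```typescript
// Hypothetical shapes for the calls requested by the model and the results
// the app sends back.
interface ParallelCall {
  name: string;
  args: Record<string, unknown>;
}

interface ParallelResult {
  name: string;
  response: Record<string, unknown>;
}

type ParallelHandler = (
  args: Record<string, unknown>,
) => Promise<Record<string, unknown>>;

// Run every requested call concurrently. Promise.all resolves to results in
// the same order as the input calls, regardless of which finishes first, and
// rejects if any single call fails.
async function runCallsInParallel(
  calls: ParallelCall[],
  handlers: Map<string, ParallelHandler>,
): Promise<ParallelResult[]> {
  return Promise.all(
    calls.map(async (call) => {
      const handler = handlers.get(call.name);
      if (handler === undefined) {
        throw new Error(`Unknown function: ${call.name}`);
      }
      return { name: call.name, response: await handler(call.args) };
    }),
  );
}
```

If partial results are acceptable when one call fails, `Promise.allSettled` is an alternative worth considering.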

You can control how and if the model can ask to call functions.

You can place some constraints on how and if the model should use the provided function declarations. This is called setting the function calling mode. Here are some examples:

- Instead of allowing the model to choose between an immediate natural language response and a function call, you can force it to always use function calls. This is called forced function calling.

- If you provide multiple function declarations, you can restrict the model to using only a subset of the functions provided.

You implement these constraints (or modes) by adding a tool configuration (toolConfig) along with the prompt and the function declarations. In the tool configuration, you can specify one of the following modes. The most useful mode is ANY.

| Mode | Description |
|---|---|
| AUTO | The default model behavior. The model decides whether to use a function call or a natural language response. |
| ANY | The model must use function calls ("forced function calling"). To limit the model to a subset of functions, specify the allowed function names in allowedFunctionNames. |
| NONE | The model must not use function calls. This behavior is equivalent to a model request without any associated function declarations. |
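As an illustration of the modes in the table, the TypeScript sketch below builds a tool configuration object. The `functionCallingConfig` wrapper, the `ToolConfigSketch` type, and the `buildToolConfig` helper are assumptions for this sketch; only the mode names and allowedFunctionNames come from this page. The checks reflect the table: a function subset belongs with ANY mode, and it should only name functions that were declared.

```typescript
type FunctionCallingModeName = "AUTO" | "ANY" | "NONE";

// Assumed layout of a tool configuration; verify against the SDK reference.
interface ToolConfigSketch {
  functionCallingConfig: {
    mode: FunctionCallingModeName;
    allowedFunctionNames?: string[];
  };
}

// Build a tool configuration, checking that a function subset is only given
// with ANY mode and only names functions that were actually declared.
function buildToolConfig(
  mode: FunctionCallingModeName,
  declaredNames: string[],
  allowedFunctionNames?: string[],
): ToolConfigSketch {
  if (allowedFunctionNames === undefined) {
    return { functionCallingConfig: { mode } };
  }
  if (mode !== "ANY") {
    throw new Error("allowedFunctionNames only applies to ANY mode");
  }
  const undeclared = allowedFunctionNames.filter(
    (name) => declaredNames.indexOf(name) === -1,
  );
  if (undeclared.length > 0) {
    throw new Error(`Not declared: ${undeclared.join(", ")}`);
  }
  return { functionCallingConfig: { mode, allowedFunctionNames } };
}

// Example: force a function call, restricted to fetchWeather.
// "fetchForecast" is a made-up second function for illustration.
const forcedWeatherConfig = buildToolConfig(
  "ANY",
  ["fetchWeather", "fetchForecast"],
  ["fetchWeather"],
);
```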

What else can you do?

Try out other capabilities

- Build multi-turn conversations (chat).

- Generate text from text-only prompts.

- Generate text from multimodal prompts (including text, images, PDFs, video, and audio).

Learn how to control content generation

- Understand prompt design, including best practices, strategies, and example prompts.

- Configure model parameters like temperature and maximum output tokens (for Gemini) or aspect ratio and person generation (for Imagen).

- Use safety settings to adjust the likelihood of getting responses that may be considered harmful.

Learn more about the supported models

Learn about the models available for various use cases and their quotas and pricing.